August 23, 2024 — Adept Product Team

Adept Workflow Language (AWL) is an expressive, custom language that allows users to easily compose powerful multimodal web interactions on top of Adept’s models.

Here at Adept, we define AI agents as “software that can translate user intent into actions.” We envision a world in which AI can assist users in everything from the handling of complex, taxing tasks to executing a high volume of rote chores — all in service of freeing up a user’s time and headspace.

Building our agent requires powerful multimodal capabilities: our agent understands the screen, reasons about what is on the page, and makes plans. Our suite of multimodal models has been trained on these capabilities from the earliest stages of training. Building a truly usable agent on top of these models, one you can depend on in production, requires additional, carefully designed characteristics.

At Adept, we’ve specifically engineered our agent to be:

Key to these features is Adept Workflow Language (from here on, AWL): a proprietary, custom language developed at Adept. AWL is a syntactic subset of JavaScript ES6 and gives users powerful abstractions for dictating multimodal web interactions. Users write agent workflows in AWL that translate directly into model calls. Some AWL functions, described in the next section, also let users write instructions in natural language, which our model then translates into detailed AWL.
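Because AWL is a syntactic subset of ES6, any AWL workflow is also valid JavaScript. The sketch below illustrates that idea only: it assumes a host that binds each AWL function name (here goToURL and click, both named in this post) to a handler and lets a standard JavaScript engine parse the workflow text. The handlers, `runWorkflow`, and the example URL are hypothetical; they record the calls a real host would dispatch to the model, and this is not Adept's interpreter.

```typescript
// Hypothetical: one handler per AWL function; a real host would issue model calls here.
type Handler = (...args: string[]) => void;

// Evaluate workflow source with each AWL function name bound to its handler.
// Assumption for illustration only: this uses the Function constructor and is NOT sandboxed.
function runWorkflow(source: string, handlers: Record<string, Handler>): void {
  const names = Object.keys(handlers);
  // The workflow is plain ES6 syntax, so the engine parses it directly;
  // the AWL function names become parameters of the generated function.
  const fn = new Function(...names, `"use strict";\n${source}`);
  fn(...names.map((n) => handlers[n]));
}

// Collects each call as text, standing in for the model calls a host would make.
const modelCalls: string[] = [];
const record = (name: string): Handler => (...args) => {
  modelCalls.push(`${name}(${args.map((a) => JSON.stringify(a)).join(", ")})`);
};

// A two-line workflow written in AWL-style syntax (hypothetical URL).
const workflow = `
goToURL("https://mail.example.com");
click("Compose");
`;

runWorkflow(workflow, {
  goToURL: record("goToURL"),
  click: record("click"),
});
console.log(modelCalls);
```

A production interpreter would more plausibly parse the restricted syntax itself rather than evaluate arbitrary code; the Function constructor is used here only to keep the sketch short.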

AWL is a powerful tool for shaping agent behavior: it lets us use either natural language or prescriptive commands, depending on how much expressiveness and flexibility a workflow needs.

Today, we’ll show you how to use it!

With AWL, users can call functions such as click(“Compose”) to invoke complex multimodal model calls. In this case, our model locates “Compose” on the screen and generates an AWL function call that includes its bounding box coordinates, clickBox(“Compose”, [52, 75, 634, 979]), which lets our actuation software inject a synthetic click on the Compose button.
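The grounding step described above can be sketched as a rewrite from click(label) to clickBox(label, box), followed by the actuation side turning the box into a single click point. This is a minimal sketch under stated assumptions: the model's screen understanding is replaced by a fixed lookup table, the box is assumed to be ordered [x1, y1, x2, y2] in pixels (the post does not say), and clicking the box's center is an illustrative choice, not Adept's documented behavior.

```typescript
// Assumed box format: [x1, y1, x2, y2] in screen pixels (order not specified in the post).
type Box = [number, number, number, number];

// Stand-in for the model's grounding: a lookup table instead of real screen understanding.
function locate(label: string, screen: Map<string, Box>): Box {
  const box = screen.get(label);
  if (box === undefined) {
    throw new Error(`Could not locate "${label}" on screen`);
  }
  return box;
}

// Rewrite click(label) into the grounded AWL-style call clickBox(label, box), as source text.
function groundClick(label: string, screen: Map<string, Box>): string {
  const box = locate(label, screen);
  return `clickBox(${JSON.stringify(label)}, [${box.join(", ")}])`;
}

// Hypothetical actuation step: click at the center of the box.
function clickPoint([x1, y1, x2, y2]: Box): [number, number] {
  return [Math.round((x1 + x2) / 2), Math.round((y1 + y2) / 2)];
}

const screen = new Map<string, Box>([["Compose", [52, 75, 634, 979]]]);
console.log(groundClick("Compose", screen)); // clickBox("Compose", [52, 75, 634, 979])
console.log(clickPoint(locate("Compose", screen)));
```

Under the assumed ordering, the click lands at (343, 527), halfway between each pair of opposite edges.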

One of our functions, act(), takes natural language input and invokes an agent reasoning loop. The command passed to act() becomes the agent’s task prompt, and the agent makes a step-by-step plan to achieve the goal. In essence, our agent tells itself what to do and recursively issues the same types of commands to our actuation software as it works through its plan.
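The act() loop can be pictured as observe, decide, act, repeated until the goal is met. The sketch below is a generic agent loop, not Adept's implementation: the `Action` shape, the `Policy` type standing in for the model, the `execute` callback standing in for actuation, and the step limit are all hypothetical.

```typescript
// Hypothetical primitive actions the loop can emit.
type Action =
  | { kind: "click"; target: string }
  | { kind: "type"; target: string; text: string }
  | { kind: "done" };

// What the stand-in model sees at each step: the goal, the current screen, and past actions.
interface Observation {
  goal: string;
  screen: string;
  history: Action[];
}

// Plays the role of the model: picks the next action from the current observation.
type Policy = (obs: Observation) => Action;

// Minimal reasoning loop: ask the policy for a step, execute it, observe the new screen.
function act(
  goal: string,
  initialScreen: string,
  policy: Policy,
  execute: (a: Action, screen: string) => string,
  maxSteps = 10,
): Action[] {
  const history: Action[] = [];
  let screen = initialScreen;
  for (let step = 0; step < maxSteps; step++) {
    const action = policy({ goal, screen, history });
    if (action.kind === "done") return history; // the policy judges the goal complete
    screen = execute(action, screen);
    history.push(action);
  }
  throw new Error(`act("${goal}") did not finish within ${maxSteps} steps`);
}

// Usage: a scripted policy over a toy three-state mail client.
const policy: Policy = ({ screen }) => {
  if (screen === "inbox") return { kind: "click", target: "Compose" };
  if (screen === "draft") return { kind: "type", target: "To", text: "bob@example.com" };
  return { kind: "done" };
};
const execute = (a: Action, screen: string): string =>
  a.kind === "click" ? "draft" : a.kind === "type" ? "addressed" : screen;

const steps = act("Start an email to Bob", "inbox", policy, execute);
console.log(steps.map((a) => a.kind));
```

The step limit is a design choice for the sketch: an open-ended loop needs some bound so that a policy that never reports "done" fails loudly instead of running forever.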

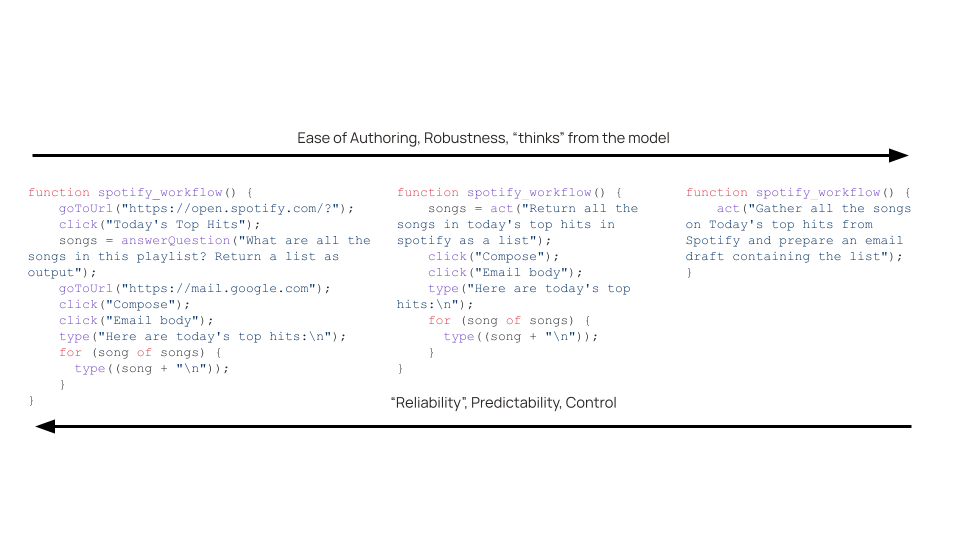

The flexibility of AWL allows us to write workflows with varying degrees of prescription and open-endedness, enabling us to choose from a spectrum of agent behavior that best suits our goal.

Let’s look at a few examples of writing an AWL workflow:

The three workflows above all accomplish the same task. The workflow on the left is the most prescriptive and uses pure functional AWL: goToURL() and click() tell the model exactly what to do. The workflow in the middle mixes this functional approach with a little natural language, in the form of the act() function. The workflow on the right is authored purely in natural language. Moving from left to right gives our agent more latitude to judge for itself what to do, which is useful if we expect the UI to change and need our agent to reason and make judgment calls in real time.
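To make the spectrum concrete, the sketch below writes one hypothetical task (opening a mail client and starting a draft) in the three styles, using only the function names mentioned in this post. The `Runtime` interface, the `Recorder` class, the URL, and the "delegation" metric (the share of calls handed to act()) are illustrative assumptions, not part of AWL, and the task is not the one pictured.

```typescript
// Hypothetical runtime exposing the three AWL functions named in this post.
interface Runtime {
  goToURL(url: string): void;
  click(label: string): void;
  act(instruction: string): void;
}

// Records each call so we can see how much each workflow delegates to the reasoning loop.
class Recorder implements Runtime {
  calls: string[] = [];
  goToURL(url: string): void { this.calls.push(`goToURL(${JSON.stringify(url)})`); }
  click(label: string): void { this.calls.push(`click(${JSON.stringify(label)})`); }
  act(instruction: string): void { this.calls.push(`act(${JSON.stringify(instruction)})`); }
}

// Most prescriptive: every step is spelled out.
function prescriptive(rt: Runtime): void {
  rt.goToURL("https://mail.example.com");
  rt.click("Compose");
}

// Mixed: fixed navigation, then an open-ended step for the agent.
function mixed(rt: Runtime): void {
  rt.goToURL("https://mail.example.com");
  rt.act("Start a new email draft");
}

// Pure natural language: the agent decides every step.
function natural(rt: Runtime): void {
  rt.act("Open https://mail.example.com and start a new email draft");
}

// Fraction of calls handed to act().
function delegation(workflow: (rt: Runtime) => void): number {
  const rec = new Recorder();
  workflow(rec);
  return rec.calls.filter((c) => c.startsWith("act(")).length / rec.calls.length;
}

for (const wf of [prescriptive, mixed, natural]) {
  console.log(wf.name, delegation(wf));
}
```

The three variants score 0, 0.5, and 1 respectively, mirroring the left-to-right shift in how much judgment is left to the agent.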

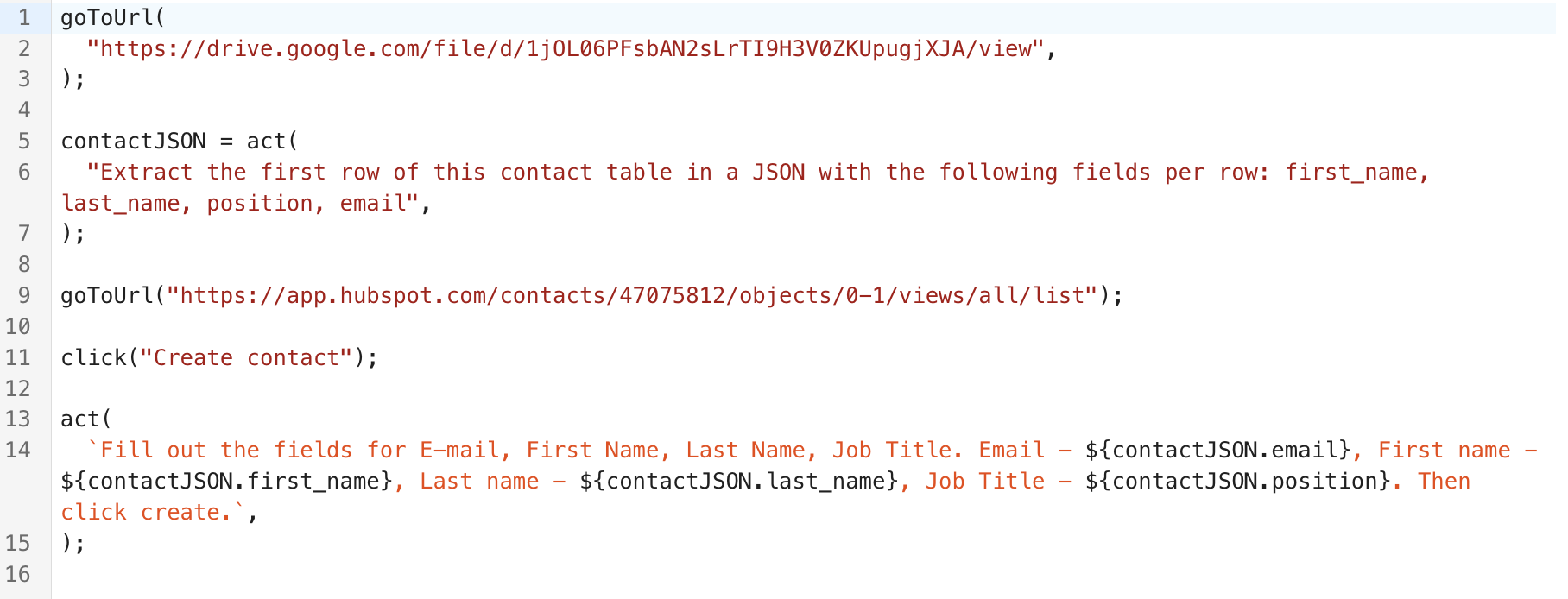

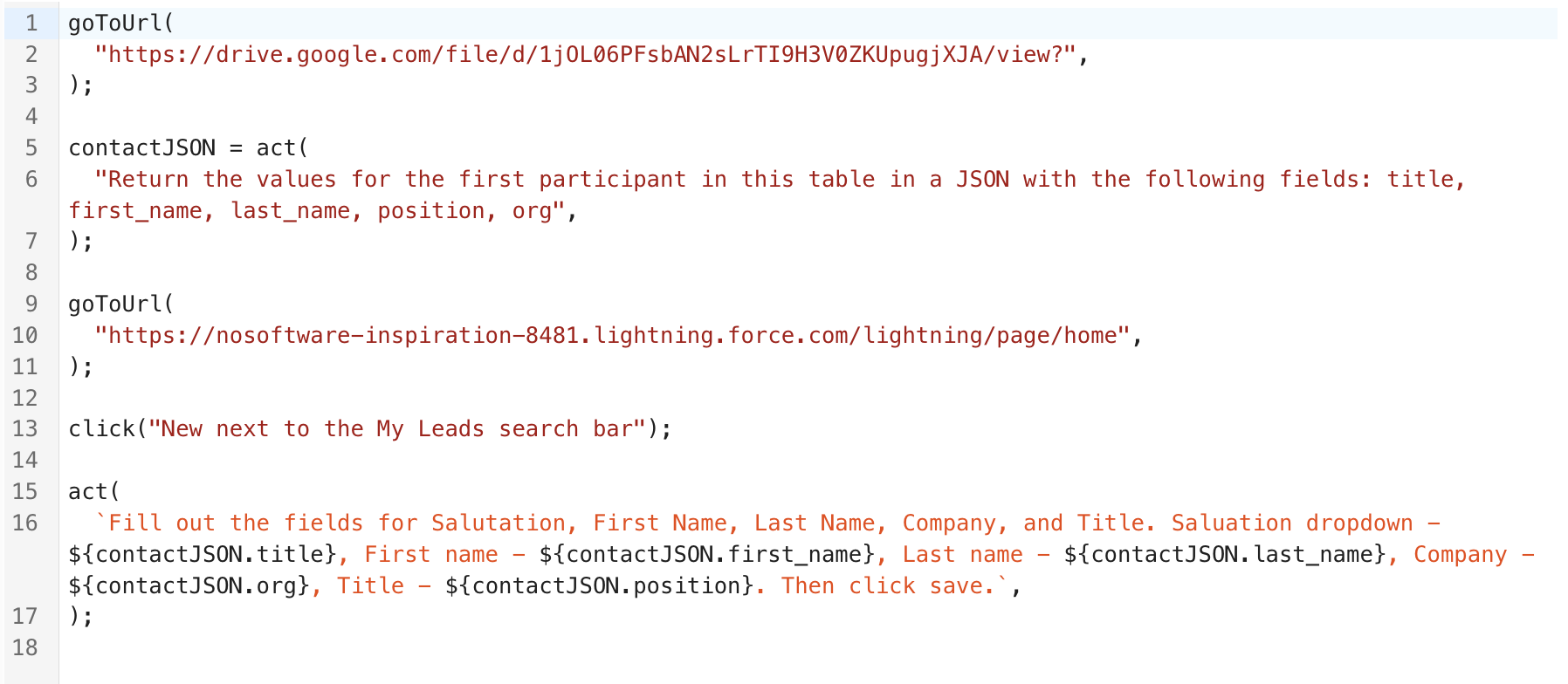

Let’s look at a real example of an end-to-end workflow:

The above workflow tells our agent to view a PDF of event attendees, take the first person from the list, and create a new Hubspot lead with their information. Watch the video on the right to see the entire workflow in action, including our agent’s “thinking” process.

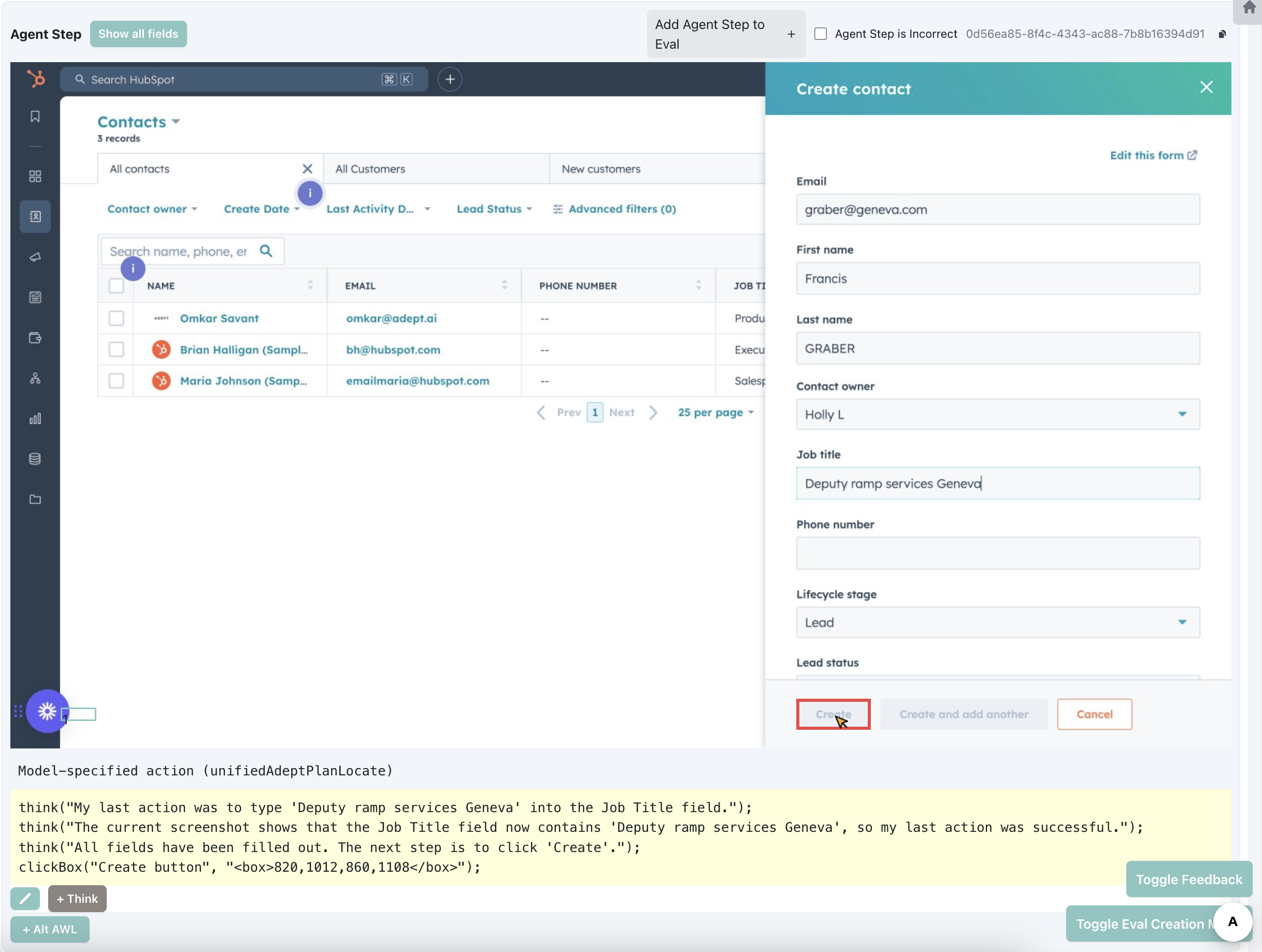

Below is a screenshot from our internal workflow viewer showing exactly what our agent captures and thinks as it executes the second act() call. At this stage, the agent has successfully filled out the contact form with information extracted from the PDF. It reflects on its last action, looks at the current state of the screen, and confirms what the next step of its plan should be, including the locate command that specifies where to click to complete this step.

The agent’s thinking here is not hard-coded: the agent loop invoked by act() means that our agent dynamically assesses — at inference time — the outcomes of previous steps and the implications for its downstream plan.

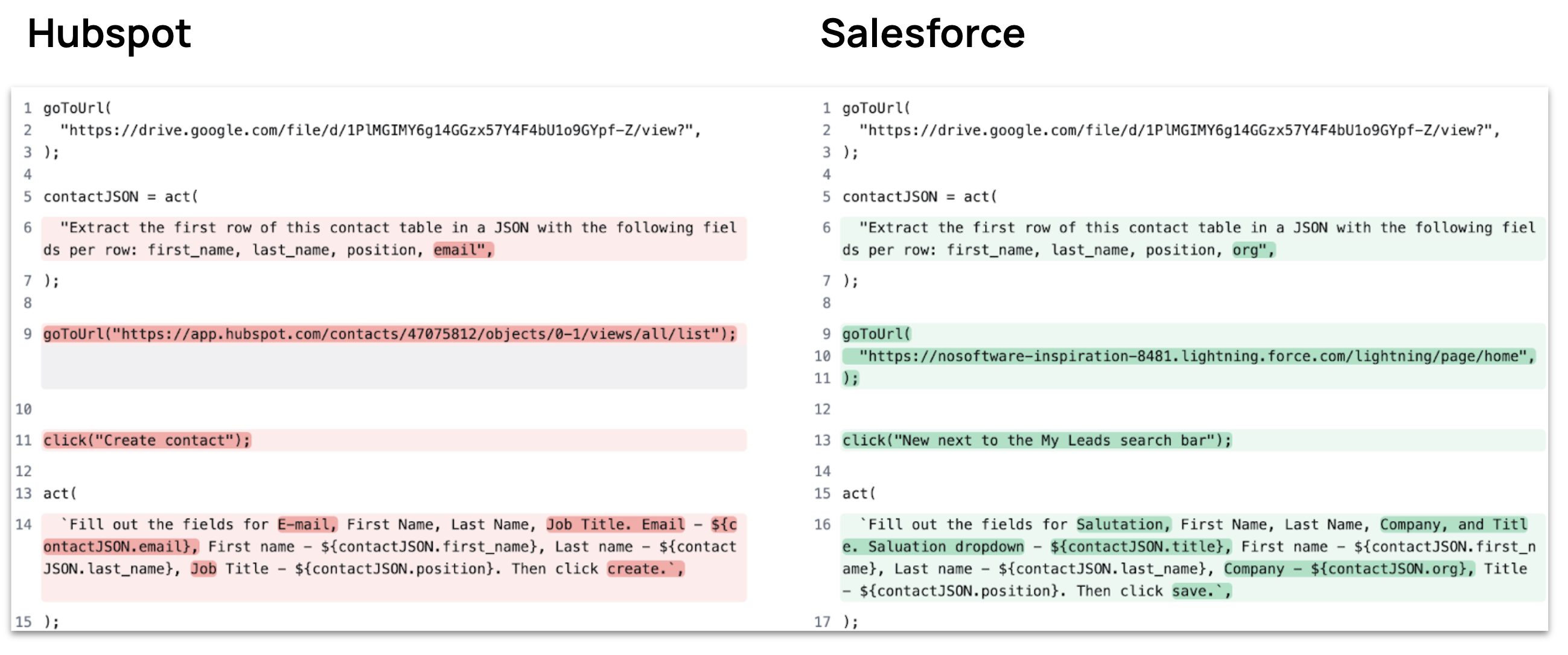

Now that we’ve got our PDF to Hubspot Lead Generation workflow working, let’s replicate it with Salesforce.

Look familiar? The above workflow tells our agent to view a PDF of event attendees, take the first person from the list – but this time, to create a new lead in Salesforce with their information. Watch the video on the right to see the entire workflow in action.

Because our agent can natively understand what’s on your screen and make plans itself, it can be remarkably robust and software-agnostic: no painstaking, app-specific integrations needed. We can reuse our Hubspot workflow, make a few small tweaks to the AWL, and get it working for an entirely different website, all in under five minutes.

Below, we compare the AWL side by side for these two workflows. The user only needs to change the URL (from Hubspot to Salesforce), and specify a few small changes — Salesforce asks for a salutation, for example, and calls contacts “leads.”
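One way to picture why the port is so small is to treat the differences as a per-site configuration over a shared template. The sketch below is hypothetical throughout: the `SiteConfig` fields, the form field names, the act() wording, and the URLs (placeholders for each product's web app) are assumptions. Only the kinds of differences (URL, the record being called a "lead", the extra salutation field) come from the comparison above.

```typescript
// Hypothetical per-site settings capturing the differences described above.
interface SiteConfig {
  url: string;           // placeholder entry URL for the CRM
  recordNoun: string;    // what this CRM calls the new person record
  extraFields: string[]; // fields this CRM asks for that the other does not
}

const hubspot: SiteConfig = {
  url: "https://app.hubspot.com",
  recordNoun: "contact",
  extraFields: [],
};

const salesforce: SiteConfig = {
  url: "https://login.salesforce.com",
  recordNoun: "lead",
  extraFields: ["Salutation"],
};

// One shared template, emitted as AWL-style source lines for a given site.
function pdfToCrmWorkflow(site: SiteConfig): string[] {
  const fields = [...site.extraFields, "First name", "Last name", "Email", "Company"];
  return [
    `act("Open the attendee PDF and read the first person on the list")`,
    `goToURL(${JSON.stringify(site.url)})`,
    `act("Create a new ${site.recordNoun} and fill in: ${fields.join(", ")}")`,
  ];
}

// Compare the two generated workflows line by line.
const a = pdfToCrmWorkflow(hubspot);
const b = pdfToCrmWorkflow(salesforce);
const changed = a.filter((line, i) => line !== b[i]);
console.log(`${changed.length} of ${a.length} lines differ`); // 2 of 3 lines differ
```

The PDF-reading step is identical for both sites; only the navigation target and the record-creation instruction change.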

Eager to see more demos? Scroll to the bottom of this blog post to see more exciting examples of sophisticated, cross-application workflows, easily written with AWL.

Adept’s agents have powerful multimodal capabilities and are optimized for enterprise automation. With AWL, users can command complex, multimodal agent automations in a simple, straightforward way.

We’re excited about the transformative possibilities that this can enable:

At Adept, we’re working on making automations easier for more people, more types of workflows, more often.

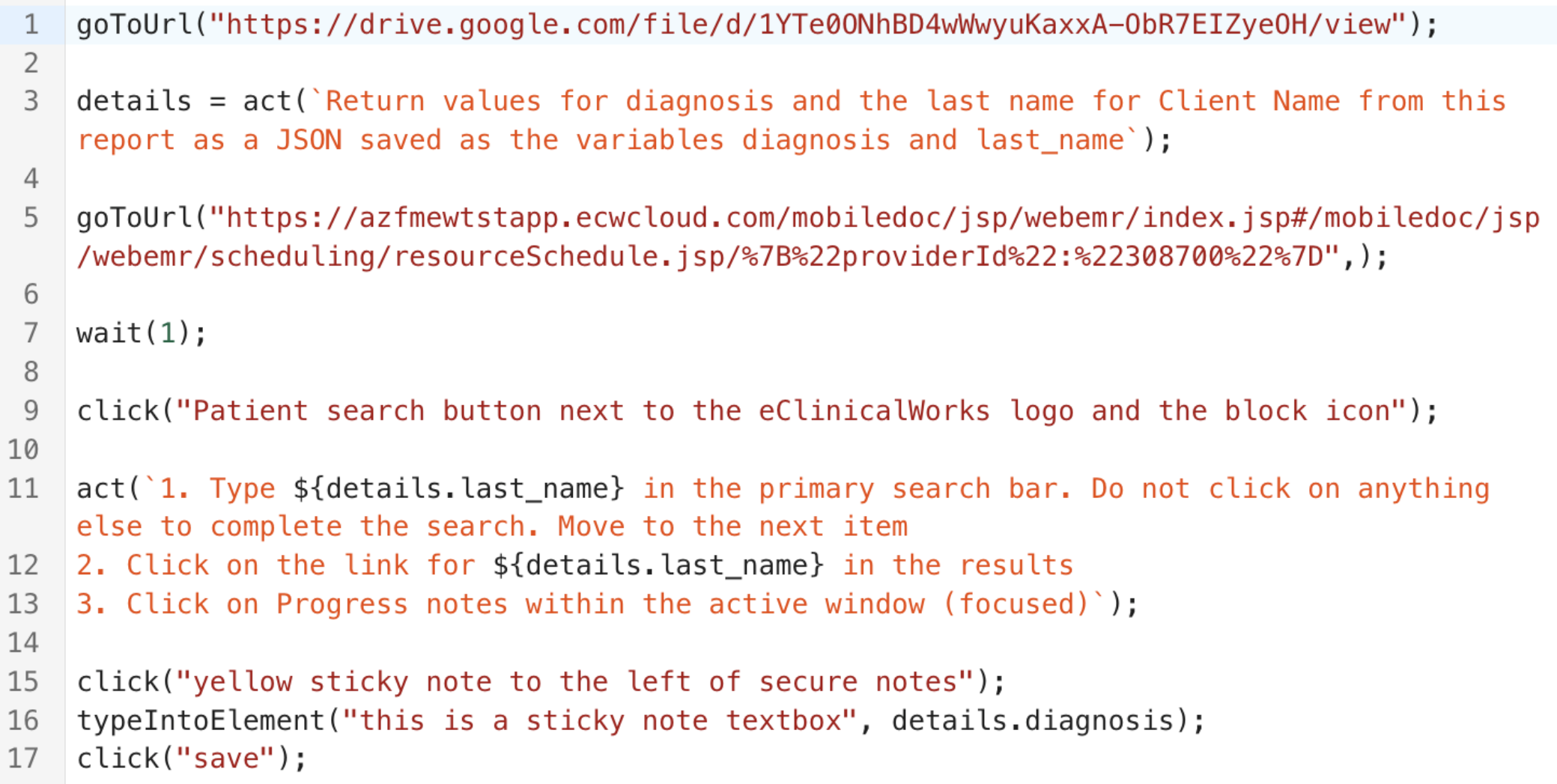

In this workflow, the agent extracts information from a PDF, searches for the patient in an Electronic Medical Records system, and updates their record with the diagnosis info they extracted.

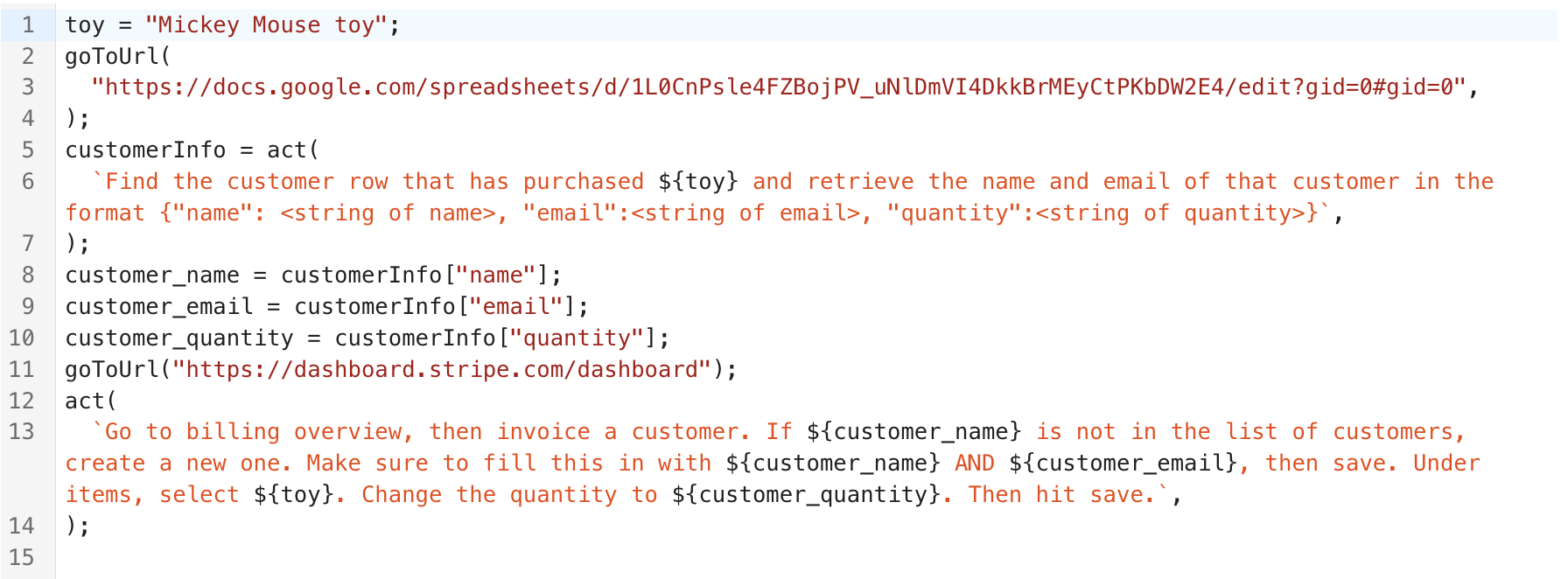

Here, the agent extracts information from a Google Sheets spreadsheet to determine which customer purchased a specific item, creates a new customer record for them in Stripe, and creates a new invoice.

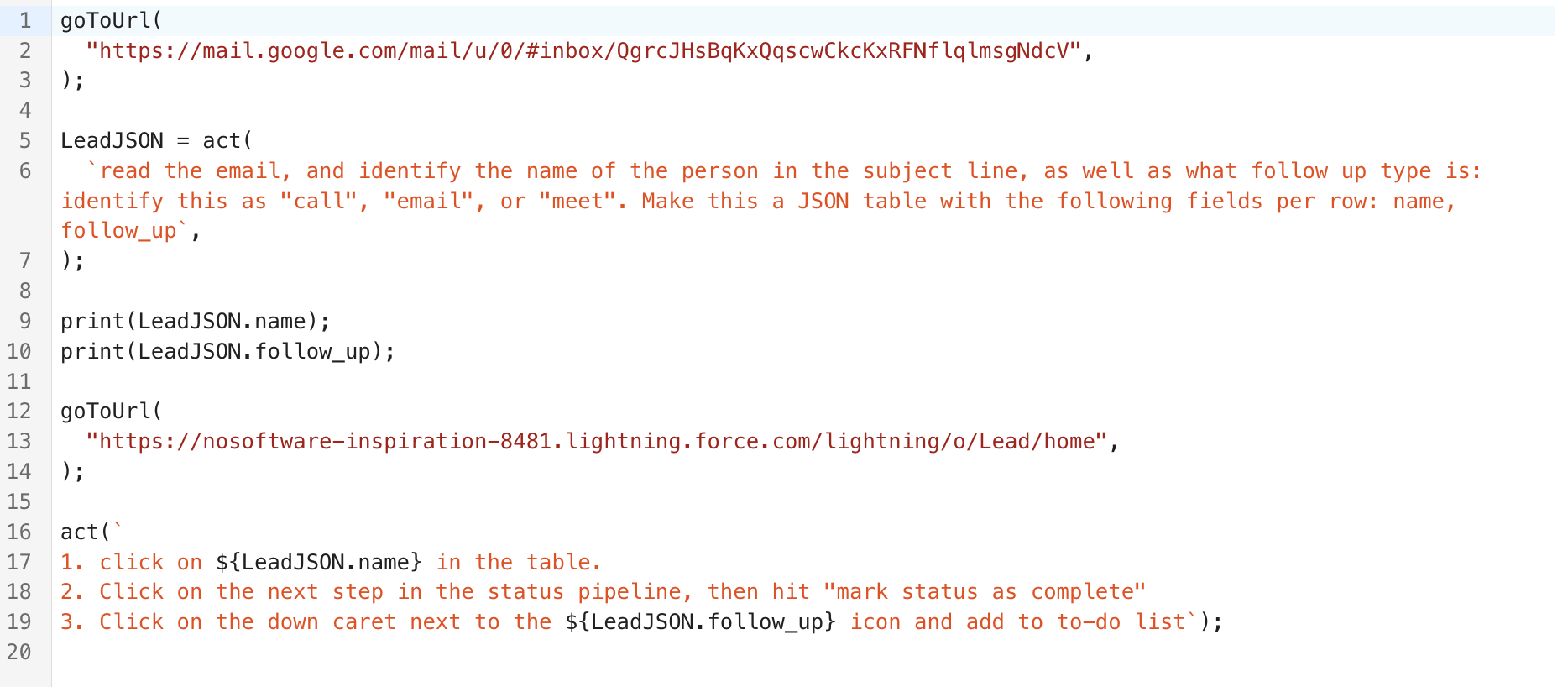

In this example, the agent triages and categorizes inbound emails. It identifies the right lead in Salesforce, updates the lead’s status in Salesforce accordingly, and even judges for itself what type of to-do to set for the team.

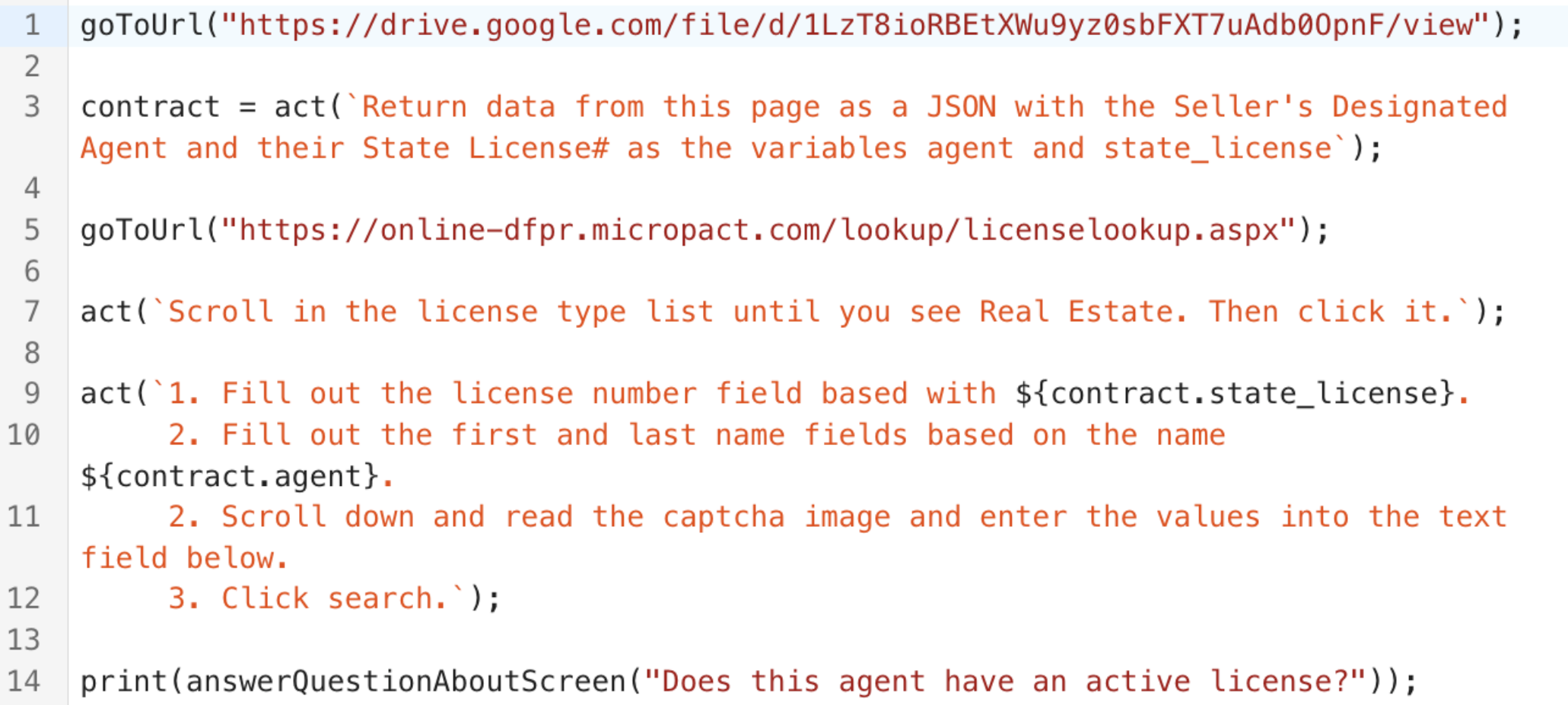

In this workflow, the agent extracts details about the specified contact from a complex contract PDF, then searches for this contact in a state database and returns whether they have an active license. Note that the agent solves a CAPTCHA in this case because it was instructed to do so.